NVIDIA Triton

NVIDIA Triton Server

We will:

- Deploy an image classification model on NVIDIA Triton with GPUs

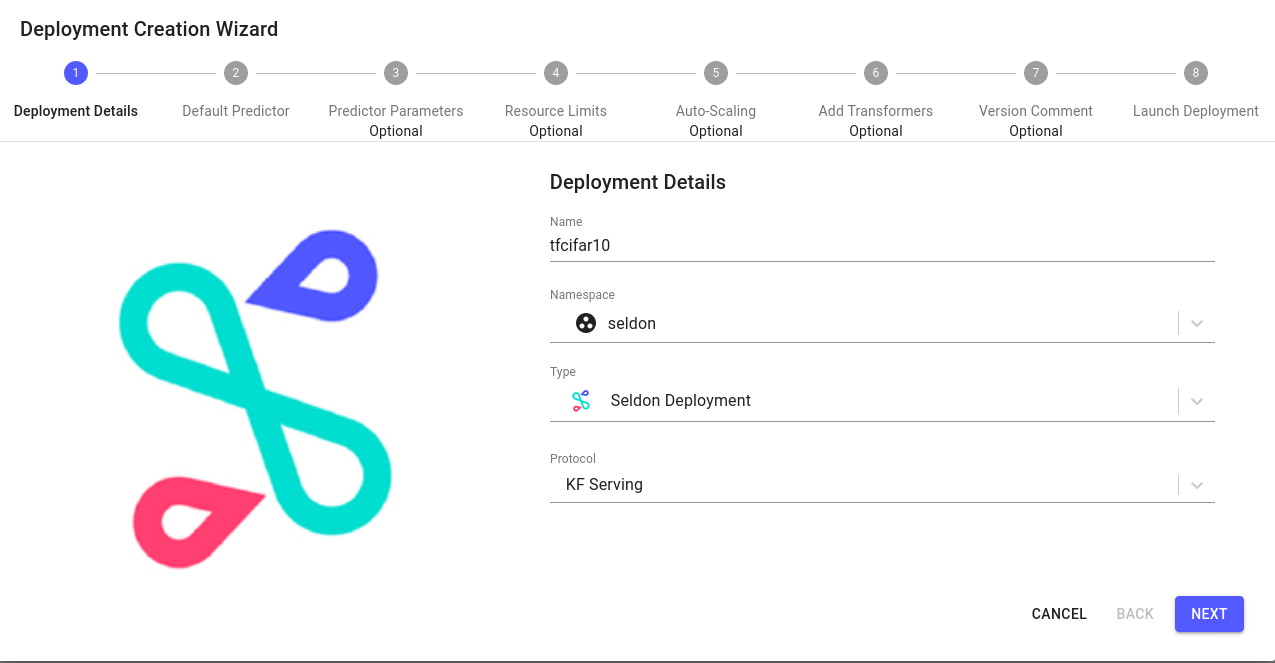

Deploy Model

For this example choose cifar10 as the name and use the KFServing protocol option.

For this example we have created several image classification models trained on the CIFAR10 dataset.

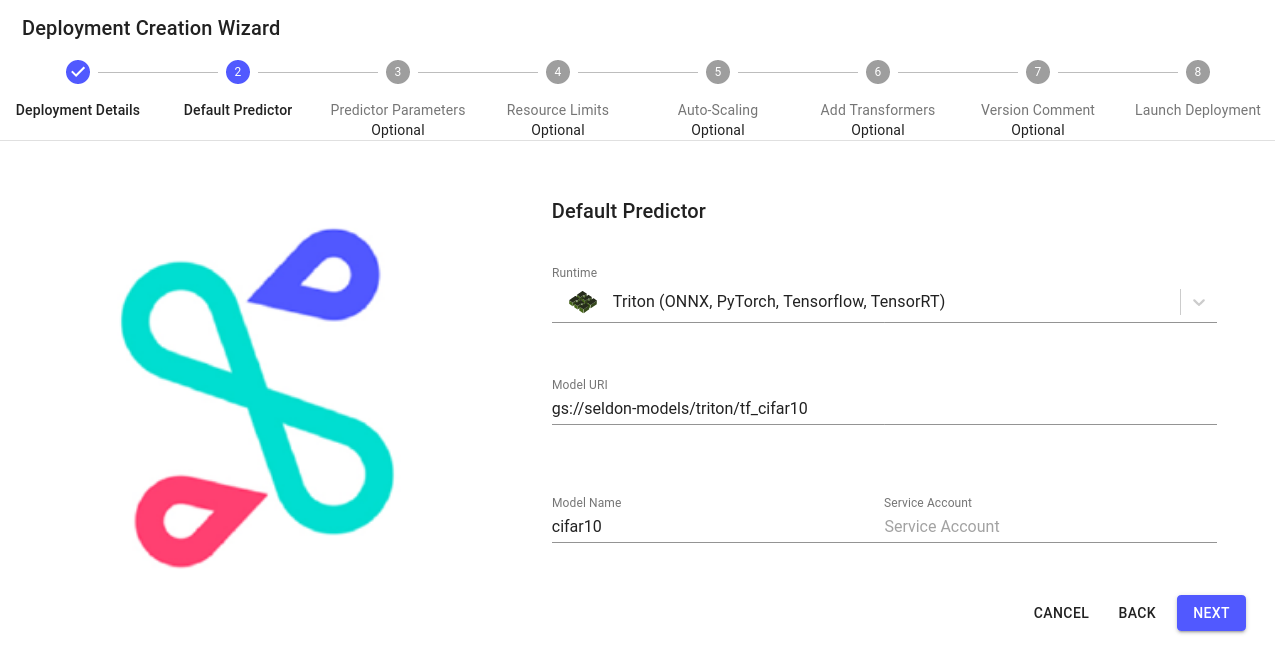

- Tensorflow Resnet32 model: gs://seldon-models/triton/tf_cifar10
- ONNX model: gs://seldon-models/triton/onnx_cifar10
- PyTorch Torchscript model: gs://seldon-models/triton/pytorch_cifar10

Choose one of these and select Triton as the server. Set the model name to match the name of the model saved in the bucket so that Triton can load it.

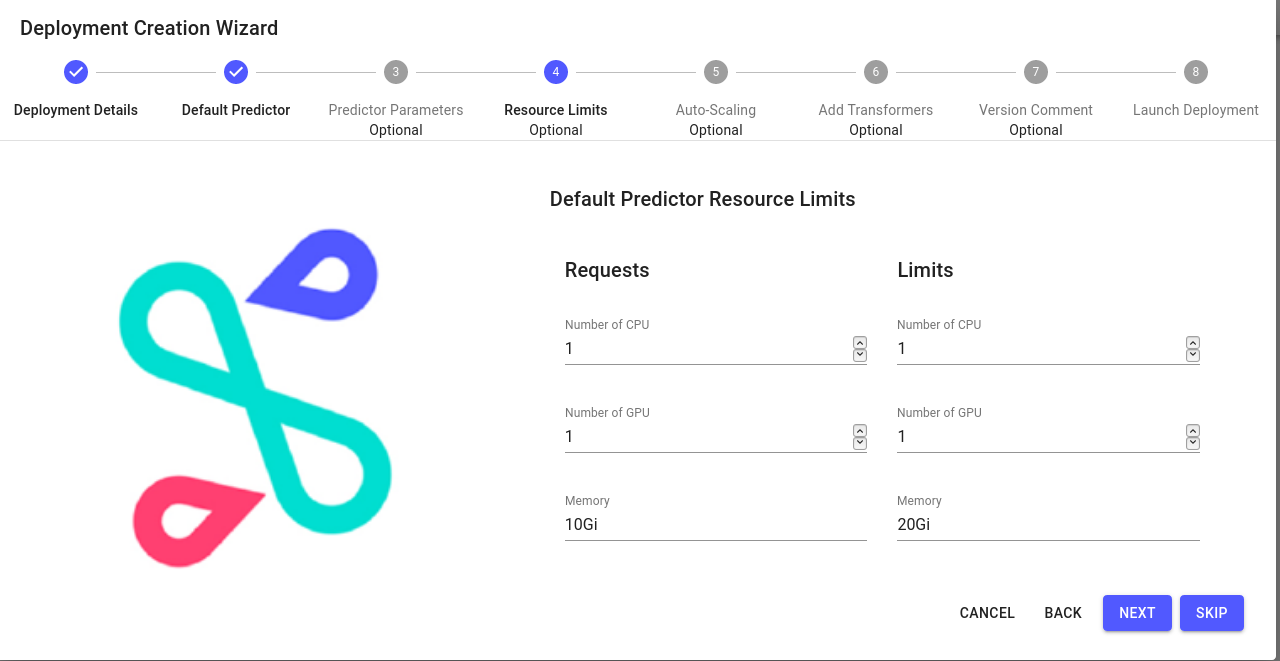

Next, on the resources screen, add a request and limit of 1 GPU, assuming GPUs are available on your cluster, and ensure you have provided enough memory for the model. To determine these settings, we recommend using the NVIDIA Model Analyzer.
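As a rough illustration of what the resources screen produces, the sketch below builds a Kubernetes-style resources block in Python. It assumes the cluster exposes GPUs under the `nvidia.com/gpu` resource name (as the NVIDIA device plugin does). The helper name `gpu_resources` and the `4Gi` memory figure are hypothetical placeholders, not values recommended for these models.

```python
import json


def gpu_resources(gpus=1, memory="4Gi"):
    """Build a Kubernetes-style resources block with matching requests and limits.

    Kubernetes requires a GPU request, when given, to equal the GPU limit,
    so the same values are used for both. The memory default is a placeholder.
    """
    if gpus < 1:
        raise ValueError("at least one GPU is needed for this example")
    shared = {"nvidia.com/gpu": str(gpus), "memory": memory}
    return {"requests": dict(shared), "limits": dict(shared)}


# Hypothetical values: 1 GPU and a placeholder memory size.
resources = gpu_resources(gpus=1, memory="4Gi")
print(json.dumps(resources, indent=2))
```

Replace the memory value with whatever your own profiling of the model suggests.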

When ready, you can test with images. The payload depends on which of the models above you deployed.
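To make the payload shape concrete, here is a minimal sketch of building a request body in the KFServing V2 inference protocol format, which takes an `inputs` list where each entry has `name`, `shape`, `datatype` and `data`. The input tensor name `input_1` and the `[1, 32, 32, 3]` shape are assumptions for illustration only. The real tensor name and layout differ between the TensorFlow, ONNX and PyTorch models, so check the configuration of the model you deployed. The helper names are hypothetical.

```python
import json
import random


def build_v2_request(input_name, shape, values, datatype="FP32"):
    """Build a V2 inference protocol request body for a single input tensor."""
    expected = 1
    for dim in shape:
        expected *= dim
    if len(values) != expected:
        raise ValueError(
            f"shape {shape} needs {expected} values, got {len(values)}"
        )
    return {
        "inputs": [
            {
                "name": input_name,      # must match the model's input tensor name
                "shape": list(shape),
                "datatype": datatype,
                "data": list(values),    # flattened, row-major tensor contents
            }
        ]
    }


def infer_path(model_name):
    """Return the V2 protocol inference path for a model."""
    return f"/v2/models/{model_name}/infer"


# Assumed example: one 32x32 RGB image with random pixel values in [0, 1).
shape = [1, 32, 32, 3]
pixels = [random.random() for _ in range(1 * 32 * 32 * 3)]
body = build_v2_request("input_1", shape, pixels)

print(infer_path("cifar10"))
print(json.dumps(body)[:120] + "...")
```

The resulting JSON can be sent as the body of a POST request to the inference path on your deployment's endpoint. The host and any path prefix depend on how your cluster's ingress is configured.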